The two problems below occur only when using the API with R.

Everything works well when using Eikon Workstation or the Eikon Excel add-in.

#PROBLEM 1

#get_timeseries() cuts off timeseries - irregularly

#Example tickers and the start of their history: IBM (17 Mar 1980), MSFT (13 Mar 1986), .IBLEM0002 (31 Oct 2010)

#Full historic data is available in Eikon and in the Eikon Excel add-in for all tickers

#Prepare

library(devtools)

library(eikonapir)

library(tidyr)

eikonapir::set_proxy_port(9000L)

eikonapir::set_app_id('Put your API Key here')

#Create tickers (run only ONE of the following lines; each one is a separate test case)

tickers = c("MSFT.O","IBM",".IBLEM0002")

tickers = c("MSFT.O",".IBLEM0002")

tickers = c("IBM",".IBLEM0002")

tickers = c(".IBLEM0002")

#Create tickers input list

ticker.list = as.list(tickers)

#Timeframe

startDate = "2003-01-01T00:00:00"

endDate = paste("2020-03-27","T00:00:00",sep="")

#Get prices

p.out = get_timeseries(

rics = ticker.list,

fields = list("TIMESTAMP","CLOSE"),

start_date = startDate,

end_date = endDate,

interval = "daily")

names(p.out) = c("Date","Close","Tickers")

#Convert from stacked to wide format

p.out = spread(data = p.out, key = Tickers ,value = Close)

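#spread() already names the value columns after the tickers (sorted, not in input order),

#so reorder the columns by name instead of overwriting the names

p.out = p.out[, c("Date", tickers)]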

head(p.out,3)

tail(p.out,3)

#RESULTS - different timeseries start dates depending on ticker combination

#If only .IBLEM0002 is included then get_timeseries() gives the full series

#If only MSFT.O or IBM is included then the timeseries is cut off at 2009-04-29

#If tickers = c("MSFT.O","IBM",".IBLEM0002"), ".IBLEM0002" starts 2016-03-11 and MSFT.O, IBM later

#If tickers = c("IBM",".IBLEM0002"), ".IBLEM0002" starts 2016-03-11 and IBM later

#If tickers = c("MSFT.O",".IBLEM0002"), ".IBLEM0002" starts 2014-03-05 and MSFT.O later
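The start dates above are easier to compare when read off the stacked (long) output before it is spread to wide format. The following is a minimal sketch in base R; `summarise_coverage` is a hypothetical helper, and the `long` data frame holds made-up values that only mimic the `Date`/`Close`/`Tickers` layout used in this script.

```r
# Hypothetical helper: first date, last date and row count per ticker
# for a stacked data frame with columns Date, Close, Tickers.
summarise_coverage = function(long) {
  parts = split(long, long$Tickers)
  data.frame(
    Tickers = names(parts),
    First = as.Date(sapply(parts, function(d) as.character(min(d$Date)))),
    Last = as.Date(sapply(parts, function(d) as.character(max(d$Date)))),
    Rows = sapply(parts, nrow),
    row.names = NULL,
    stringsAsFactors = FALSE
  )
}

# Toy data standing in for the get_timeseries() result (values are invented)
long = data.frame(
  Date = as.Date(c("2016-03-11", "2016-03-14", "2016-03-14", "2016-03-15")),
  Close = c(100, 101, 50, 51),
  Tickers = c(".IBLEM0002", ".IBLEM0002", "IBM", "IBM"),
  stringsAsFactors = FALSE
)

coverage = summarise_coverage(long)
print(coverage)
```

Running this on the real `p.out` right after the `names(p.out) = c("Date","Close","Tickers")` step would show the first date and the number of rows per ticker, which makes it visible whether the rows returned in one request add up to a similar total across ticker combinations.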

#PROBLEM 2

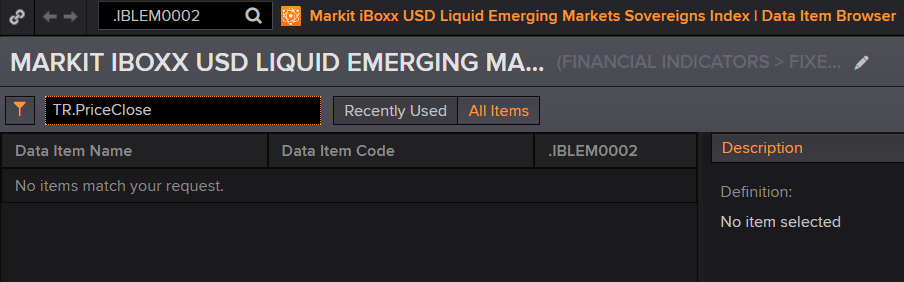

#get_data() gets the full timeseries for IBM and MSFT.O BUT nothing for .IBLEM0002

#Full historic data is available in Eikon and in the Eikon Excel add-in also for .IBLEM0002 (starts in 2010)

startDate = "2003-01-01"

endDate = "2020-03-27"

p.out = get_data(

instruments = ticker.list,

fields = list("TR.PriceClose.Date","TR.PriceClose"),

parameters = list("Frq"="M","SDate"=startDate,"EDate"=endDate))

names(p.out) = c("Tickers","Date","Close")

p.out = spread(data = p.out, key = Tickers ,value = Close)

head(p.out,3)

tail(p.out,3)

I need to download the full historic timeseries for a range of different ticker types. Please let me know if there is an error in my code or if there is a different, more consistent approach.
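One possible workaround, sketched under the assumption that the truncation comes from a cap on the number of rows returned per request (nothing above confirms this), is to request one ticker at a time in shorter date windows and bind the pieces together. `make_windows`, `fetch_chunked` and `fake_fetch` are hypothetical names; `fake_fetch` only stands in for the real API call so that the sketch runs without an Eikon connection.

```r
# Split [start, end] into consecutive windows of at most `years` years
make_windows = function(start, end, years = 5) {
  from = seq(as.Date(start), as.Date(end), by = paste(years, "years"))
  to = c(from[-1] - 1, as.Date(end))
  data.frame(from = from, to = to)
}

# Call `fetch(ric, from, to)` once per ticker and window, then stack the results.
# `fetch` must return a data frame with columns Date, Close, Tickers.
fetch_chunked = function(tickers, start, end, fetch, years = 5) {
  win = make_windows(start, end, years)
  pieces = list()
  for (ric in tickers) {
    for (i in seq_len(nrow(win))) {
      pieces[[length(pieces) + 1]] = fetch(ric, win$from[i], win$to[i])
    }
  }
  out = do.call(rbind, pieces)
  # Drop rows that appear twice for the same date and ticker
  out[!duplicated(out[, c("Date", "Tickers")]), ]
}

# Stand-in for the API: returns one invented row at each end of the window
fake_fetch = function(ric, from, to) {
  data.frame(Date = c(from, to), Close = c(1, 2), Tickers = ric,
             stringsAsFactors = FALSE)
}

res = fetch_chunked(c("IBM", "MSFT.O"), "2003-01-01", "2020-03-27", fake_fetch)
print(range(res$Date))

# With the real API, `fetch` could wrap the call used earlier (untested assumption):
# real_fetch = function(ric, from, to) {
#   x = get_timeseries(rics = list(ric), fields = list("TIMESTAMP","CLOSE"),
#                      start_date = paste0(from, "T00:00:00"),
#                      end_date = paste0(to, "T00:00:00"), interval = "daily")
#   names(x) = c("Date","Close","Tickers")
#   x
# }
```

The window length of five years is arbitrary; if rows are still missing at the start of a window, a shorter window would be the next thing to try.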

Thank you very much in advance